Web-based MIDI Editor and Synthesizer

I'd been exploring the WebAudio API and the things people have built with it. I was blown away by demos such as this Karplus-Strong synthesis demo and this collection of WebAudio effects, and I wanted to try it out for myself.

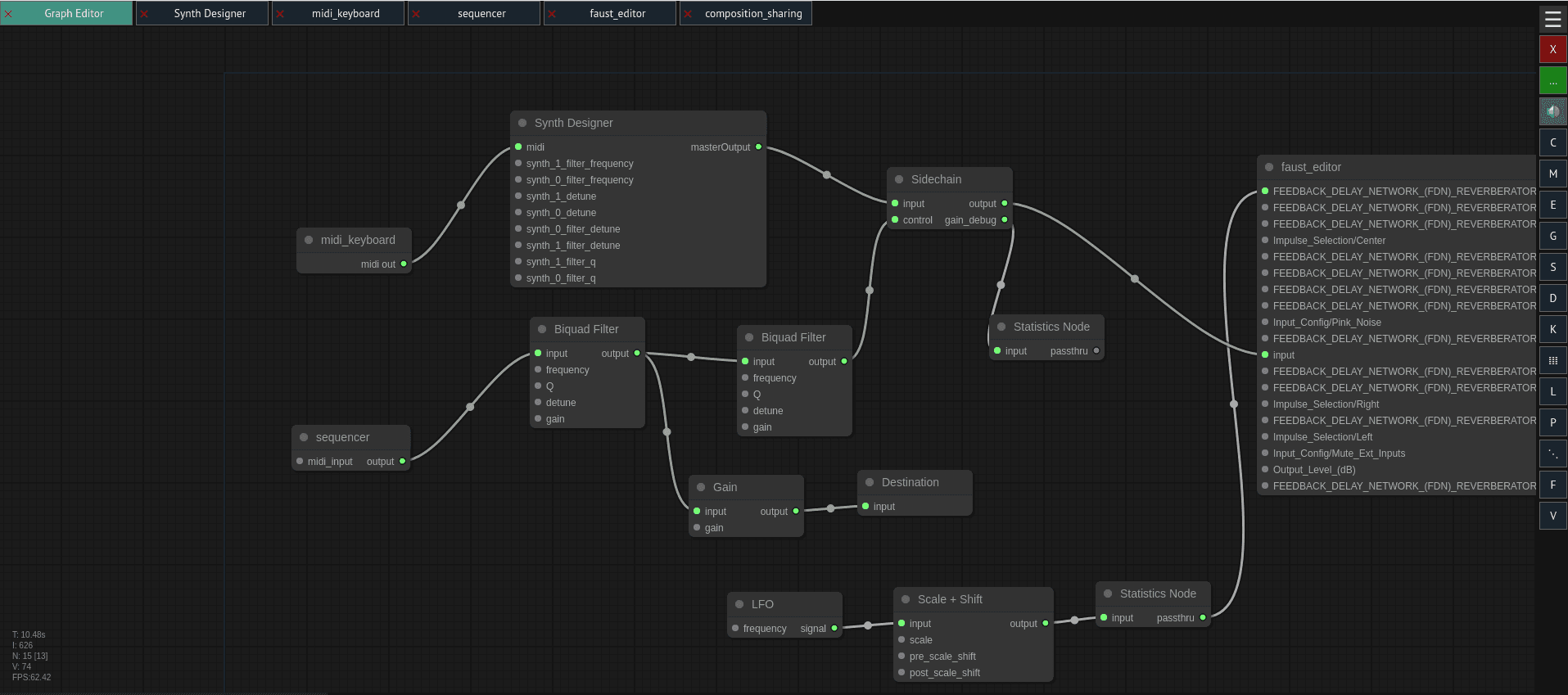

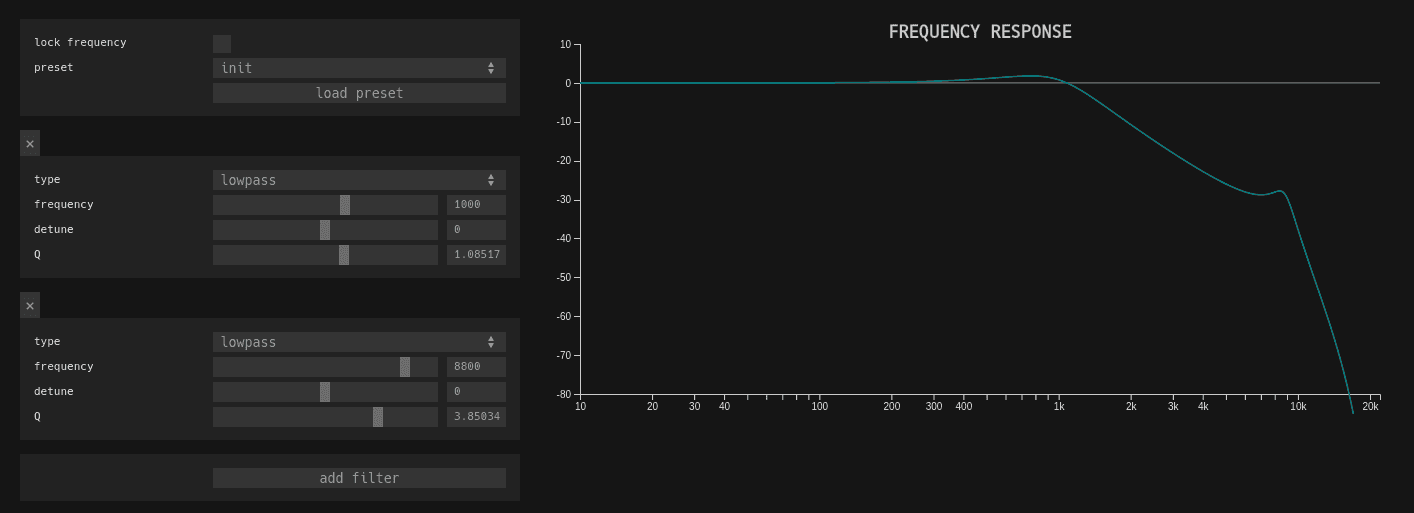

This project started out as a web-based MIDI editor with a very simple synthesizer built on existing WebAudio-based libraries. Since then, it has grown into an extensive collection of modules covering a wide range of functionality, including several types of synthesizers, audio effects, noise generators, sequencers, audio visualizations and analyzers, a sample library, and audio graph management, among others.

The project is still under very active development, and the best place for an up-to-date overview and demo is the GitHub repository: https://github.com/ameobea/web-synth

Related Projects

Web synth is a collection of connected modules and sub-projects that all run in the web browser using WebAudio. While I've been working on it, a couple of pieces have grown into full-fledged projects of their own.

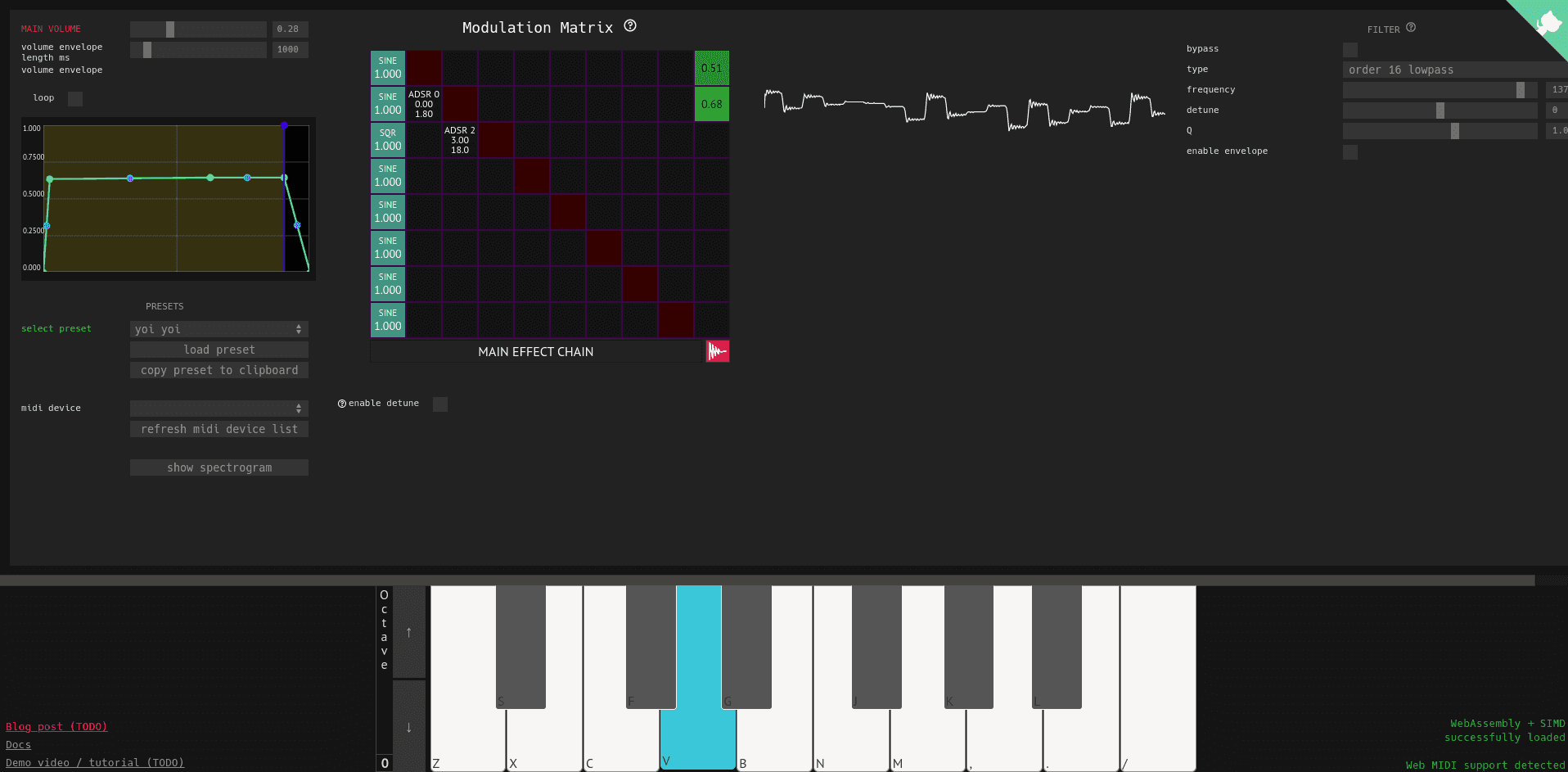

FM Synthesizer

Try it yourself: https://notes.ameo.design/fm.html

While experimenting with FM synthesis, I ended up creating a pretty capable FM synthesizer. It's integrated into web synth, so it can interoperate with all of the other modules and exists as a fully controllable part of the audio graph. I also created a standalone page for it with a more minimal UI. That page focuses on showing off the synth's capabilities through a variety of presets, and it lets people try it out without first having to learn the full web synth platform.
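For readers new to FM synthesis, the core idea can be shown with a single modulator and carrier. The sketch below is a generic two-operator example, not web synth's actual implementation; the function name `renderFm` and all parameter values are hypothetical. Strictly speaking it modulates the carrier's phase rather than its frequency, which is how many "FM" synthesizers implement the technique in practice.

```typescript
// Generic two-operator FM sketch (sine modulator -> sine carrier).
// Hypothetical helper for illustration; not taken from web synth.

/** Render `numSamples` samples of a basic FM tone:
 *  y[n] = sin(2*pi*fc*t + I*sin(2*pi*fm*t)), where t = n / sampleRate. */
function renderFm(
  carrierHz: number,
  modulatorHz: number,
  modIndex: number,
  sampleRate: number,
  numSamples: number,
): Float32Array {
  const out = new Float32Array(numSamples);
  for (let n = 0; n < numSamples; n++) {
    const t = n / sampleRate;
    // The modulator offsets the carrier's phase; a larger modIndex
    // produces more (and stronger) sidebands, i.e. a brighter timbre.
    const modulation = modIndex * Math.sin(2 * Math.PI * modulatorHz * t);
    out[n] = Math.sin(2 * Math.PI * carrierHz * t + modulation);
  }
  return out;
}

// Usage: 440 Hz carrier, 2:1 modulator ratio, index 3, 10 ms at 44.1 kHz.
const samples = renderFm(440, 880, 3, 44100, 441);
```

Setting `modIndex` to 0 reduces the output to a plain sine at the carrier frequency. Integer carrier-to-modulator ratios such as 1:2 tend to give harmonic, pitched tones, while non-integer ratios give inharmonic, bell-like ones.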

Docs + Audio Programming / DSP Notes

I wanted to create a docs website for web synth and related materials, as well as a place to keep track of the things I learned as I got deeper into audio programming and DSP development. I came across a tool called Foam, a note-taking and knowledge base tool that runs in VS Code and generates static HTML from Markdown.

Since then, I've been steadily expanding it with usage guides and documentation for web synth, along with assorted notes. Check it out here: https://notes.ameo.design/docs